Homework 5

Homework 5

Due: Wednesday, 4/16 at 10pm

Overview

In this assignment, you will explore branch predictors and the consequences of speculative execution. You will implement a hybrid branch predictor that chooses among five existing branch predictor implementations (including state-of-the-art predictors) and analyze whether the increased complexity is worth it. You will also read about and ponder two alternative speculative execution mechanisms.

Stencil code

Get started by pulling the latest changes to the assignments repo! The config file for the hybrid predictor is found in configs/assignments/branchpred/bp-assignment.py and the Microbenchmark binaries are found in tests/test-progs/microbench-riscv.

Submission and grading

You will upload a written and programming component to one Gradescope assignment. The written component should be uploaded as a PDF. For the code, upload hybrid.cc and hybrid.hh.

Part 1: Hybrid Branch Predictor

In class, we learned about a tournament branch predictor, which keeps a 2-bit saturating counter for each branch. If the counter is, for example, 00 or 01, the branch uses the global predictor (based on the outcome history of all recent branches). However, if the counter is 10 or 11, the branch uses the local predictor (which itself is a 2-bit saturating counter where the bits encode Strong/Weak Taken/Not Taken, as we saw in class). Once the branch outcome is known, the saturating counter is updated towards the more accurate predictor (for example, if the saturating counter is 01, and the global predictor ends up incorrect but the local predictor ends up correct, the saturating counter is updated to 10, which means the branch will switch to using the local predictor on the next iteration. In the opposite case, the saturating counter is updated to 00, which means the branch will continue using the global predictor and will require two mispredictions of the global predictor to switch to the local predictor).

The idea behind this scheme is that each branch adapts to using the branch predictor that has worked well for it. Using 2-bit saturating counters in this way cuts down on transistor usage and also hardware complexity, while still allowing for pretty stark gains in accuracy over a local predictor. In CS1952y homeworks, however, we are unburdened by such constraints as “transistor usage.” Let’s use the full simulation capabilities of gem5 to explore a much more souped up hybrid branch predictor that chooses between 5 predictors for each branch.

The stencil code, found in src/cpu/pred/hybrid.{cc, hh}, holds five predictors that already come with gem5. The configuration in src/cpu/pred/BranchPredictor.py (which you will not be changing) initializes each predictor with the default parameters. To work with the gem5 CPUs, a class that inherits from BPredUnit needs to implement four methods: lookup, update, updateHistories, and squash. You can see how these methods are called by a branch predictor unit in src/cpu/pred/bpred_unit.cc. Most importantly, when lookup is called, each branch predictor can create a pointer to some sort of history object for each branch, which the caller code then passes back to update the predictor on the next action (update, speculative update, or squash). The provided HybridBP currently wraps a local predictor and shows how this data structure is created and destroyed.

If you run the provided code, you should see the same branch predictor accuracy (system.cpu.branchPred.condIncorrect / system.cpu.branchPred.condPredicted in stats) for the hybrid BP as the local BP (to switch between branch predictors in the config script, use the flag --branchpred).

Modify the HybridBP class to use all five predictors, instead of just the one. Each implementation of the four BP methods should call that same method on all five predictors, to allow them to keep track of internal state (similar to how each method makes the calls on localBP) – essentially running the five predictors in parallel. In lookup, your implementation should return the prediction of the branch predictor that has been the most accurate so far for that branch. This means that you should create data structure(s) to track how each predictor does on each branch by keeping a history of at least the last 8 times the branch predictor has encountered each branch. You can also modify PredHistPtrContainer to keep track of any history you need. lookup should increment the stat counter for whichever branch predictor was used to return the result for the given branch at the given moment. This means that the five statistics {local, tournament, bimode, tage, perceptron}Preds should add up to condPredicted in the end.

There’s a lot of flexibility in how to implement this – we’ll be looking to see that you’re actually running the branch predictors in parallel and that you’re returning the branch decision based on the branch history. We’re also looking for you to be strategic in handling branches that do not yet have an established history.

Part 2: Analysis of the branch predictors

Run each of the predictors (hybrid, and the five provided as standalones) on the CCa, CCm, and CRm benchmarks (the descriptions of them are given in the Microbenchmark repository that we pulled them from). Which predictor performs the best? Is this surprising?

In your PDF file, answer the following questions:

A: You probably noticed that the hybrid predictor performs worse than a standalone predictor that comes with gem5 (if not, let us know! We might be missing something in our reference implementation that you’ve cracked). Where do you think this discrepancy is coming from (remember, you’re being strategic about branches that do not have an established history, so the effect probably isn’t just coming from “untrained” branches)? Your answer should demonstrate understanding of how the existing branch predictors track history in gem5.

B: As we’ll see in class, it’s getting a bit harder to cram more and more transistors on a board, due to manufacturing capabilities, quantum effects at small scales, and power/energy constraints. Nevertheless, scientists and engineers keep coming up with new technologies to surmount what seemed impossible mere years ago. Let’s say we come up with a new technology that allows us to increase the complexity of our circuitry in a way where we can actually afford to implement our hybrid BP in hardware (still at some power/energy/space/$$$ cost). Do you think it would be worth it to add this technology to an existing OOO speculative superscalar CPU, or would the technology be better spent trying to improve throughput/latency in some other way? Regardless of your opinion, in your answer, give at least two examples of how a theoretical large increase circuit complexity could get around some of the limits of ILP we’ve seen in class, and be creative (don’t just say “larger cache” or “more functional units.” Can we move things around? If we wanted to make just one or two things in our CPU circuit fast, what would they be?)

C: Variations on the TAGE predictor (such as LTAGE, used in this assignment, or LTAGE with statistical correlator, provided by gem5) are considered to be the ones to beat in modern branch predictor competitions (a real thing). This might not match your observations when running the benchmark programs. Based on the TAGE paper (feel free to find other sources that describe the predictor instead, and cite them), where do you think this mismatch comes from? Your answer might consider the provided benchmarks, the parameters available to configure TAGE, your understanding of gem5, or any other ideas you think of, but should demonstrate understanding of the underpinnings of the TAGE predictor.

Part 3: Alternatively speculative execution

As you saw in the ISA assignment, timing side channels based on application behavior can be used to leak secrets if the application has some input dependent branch, and one side of the branch has one form of instruction a and the other side of the branch has instruction b. But you may have noticed that the modifications required to defend against that kind of leakage required a lot of work. Furthermore, multiplication instructions that weren’t processing on secret data suffered in order to eliminate this leakage. After much thought, you decide there must be a better way. You stumble across this paper which proposes, when your processor comes across a branch, alternating between both sides of the branch in order to avoid leaking the timing of one side of the branch versus the other. You wonder if the compiler really needs to get involved here, so you also hatch an idea to use hardware threads (harts) to run both branch outcomes in parallel. You wonder which one would work better.

In the SemPE protocol, the compiler divides the assembled code into “segments” on conditional control instructions, and the CPU executes both segments (not taken first). To do achieve this, a special hardware table is deployed in the processor with two cells for each of these instructions – one for taken, one for not taken. The table stores the destination address (the end of the code segment) and tells the program counter logic how it should be updated upon reaching the end of a code segment block.

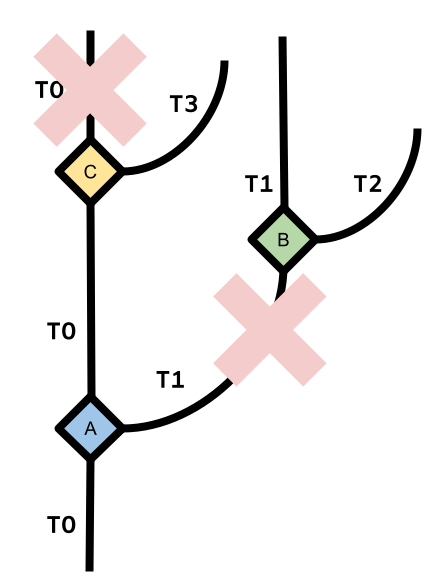

On the flip side, the (theoretical) P3 (Paranoid Prediction Processor) would spawn a new hart for each conditional control instruction, and have each hart execute an assigned side of the branch, as in the diagram below. Both harts execute their side of the branch in parallel using the ILP techniques we saw in class. When the control logic resolves the condition, the hart for the incorrect side of the branch is squashed and killed. The hart on the correct side of the branch continues executing as if nothing happened.

Answer the following questions about the protocols:

A: This question asks you to consider the implications of target mispredictions as opposed to outcome mispredictions (remember that the target is the address of the next instruction to be executed. We talked about Branch Target Buffers, or BTBs as a way of caching target predictions in class). In both parts, provide code examples (don’t have to be long) and/or diagrams to support your answer. * Is there a situation where a target misprediction would have implications on the SeMPE protocol? * Are there situations where a target misprediction in P3 would require killing a hart without having to spawn a new one? What about killing a hart and having to spawn a new one?

B: Consider the diagram of P3 execution above. Are there any cases where the true condition of branch C would be evaluated before branch B is reached in an O3 processor? What would be the implications of this case?

C: In an OOO implementation of the P3, what needs to happen on a branch? Be detailed about the information that the newly spawned hart needs to track, and make sure you account for control flow correctness of multiple sequential branches and data flow correctness when multiple harts are executing in parallel and when a hart needs to be squashed. Also explain whether we need to check for any structural hazards when encountering a branch.

D: Compare and contrast the trade-offs incurred by the SeMPE and the hart-spawning processor. If you were concerned about timing side-channel threat model, which proposed protocol would be more compelling to you? Explain why in 2-3 sentences.

E: Read the Introduction of the SeMPE paper. Are you convinced by the need for this approach in hardware? Do you think that there are reasonable use-cases for such a CPU?